GDPR compliance is becoming increasingly important. SAP provides a GDPR process for the Customer object in SAP Hybris Cloud for Customer. One issue with this standard process is that it does not take Tickets and Activities into account; only Sales Orders and Sales Quotes are considered. As a result, customer data could be removed even though Tickets or Activities for that customer were recently created or changed and are not yet completed. This customer data is obviously still relevant and needs to remain available in the system.

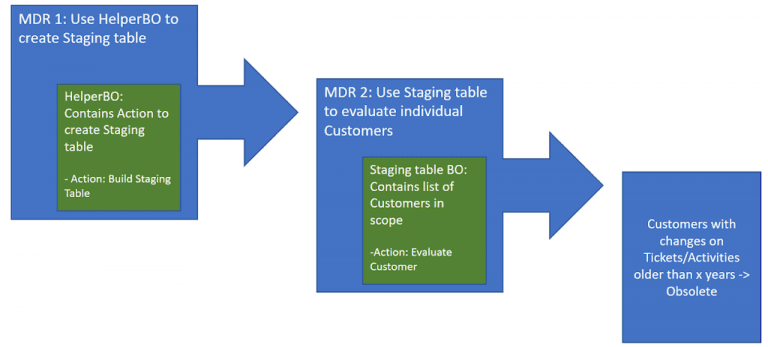

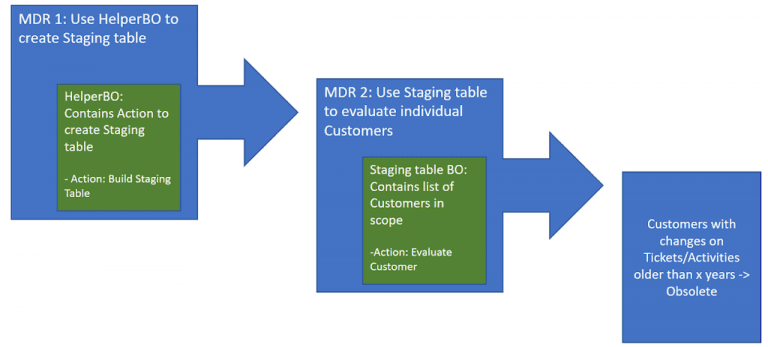

To avoid this situation and take Tickets and Activities into account in our GDPR compliance process, we can use Mass Data Runs (MDRs). At a high level, a first MDR creates a staging table containing all Customers that are relevant for the process. A second MDR goes through these Customers and evaluates each one individually to see whether there has been recent activity for that Customer in the Ticket and Activity objects. If there has not, the Customer is set to status Obsolete. Customers in status Obsolete can then be handled correctly by the standard GDPR process of SAP Hybris Cloud for Customer.

Mass Data Runs (MDR)

MDRs can be seen as jobs that run in the background, which makes them ideal for processing large amounts of data (hence the name).

MDRs are objects created in the PDI (Partner Development Infrastructure, also known as the SAP Cloud Applications Studio). An MDR consists of a custom business object (BO) holding the entries that need to be processed and an action containing the logic that processes each entry. Additionally, you can add selection criteria to restrict which entries are processed.

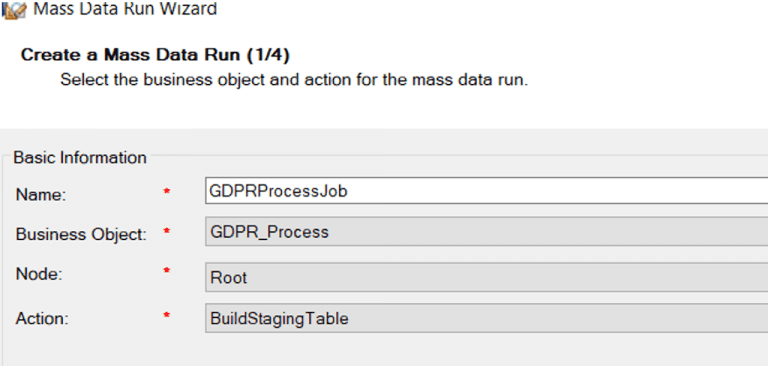

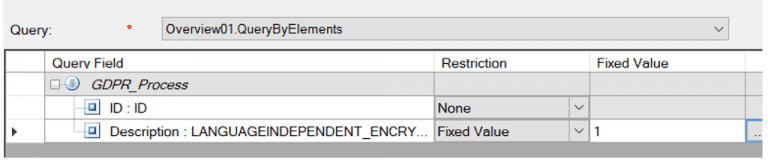

In our first MDR, we use a helper BO that contains nothing more than a single entry with an ID and a description. In our case, this BO is called GDPR_Process. It also contains an action, called BuildStagingTable, that builds the staging table with the Customers relevant for our GDPR process. It is also important to create a simple query that selects all entries and all fields of the custom BO; otherwise, you will not be able to create an MDR for it. Lastly, we create (standard) screens for the custom BO so that we can create entries for it manually (in the PDI, right-click the custom BO and select Create Screens).

Now we can create our MDR. In the PDI, right-click your solution and choose Add -> New Item -> Mass Data Run. Then specify the custom BO information:

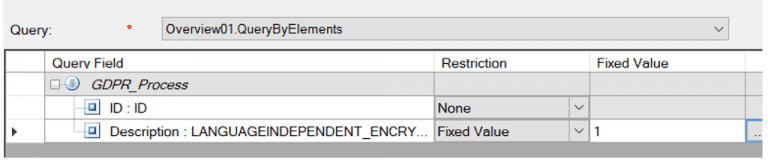

In the next tab, specify the selection criteria. Because the custom BO only contains a single entry, this can be as simple as the following:

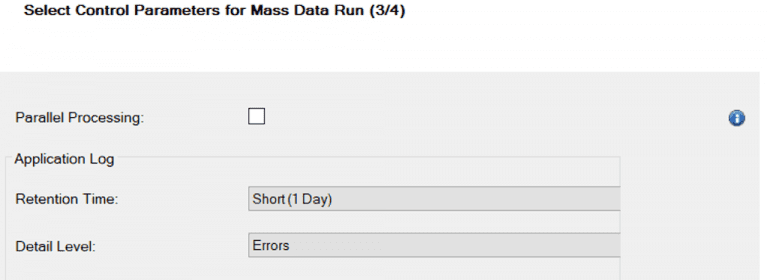

In the final tab, you specify the level of detail of the job log and how long messages are retained. These settings can be left at their defaults.

This tab also offers a Parallel Processing flag. When it is set, the entries of the custom BO that need to be processed are divided into batches of 50, and these batches are processed in parallel. Our first MDR works on a custom BO with a single entry, so parallel processing is not required. (The second MDR, which processes the staging table and evaluates each customer, benefits considerably from parallel processing.)
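The idea behind the Parallel Processing flag can be illustrated with a small Python sketch: cut the entries into batches of 50 and hand each batch to a separate worker. This only models the concept; the real batching and scheduling are done by the MDR framework, and the functions `split_into_batches` and `run_in_parallel` as well as the worker count are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")

BATCH_SIZE = 50  # batch size mentioned for MDR parallel processing


def split_into_batches(entries: Sequence[T], batch_size: int = BATCH_SIZE) -> List[List[T]]:
    """Cut the entries into consecutive batches of at most batch_size items."""
    return [list(entries[i:i + batch_size]) for i in range(0, len(entries), batch_size)]


def run_in_parallel(
    entries: Sequence[T],
    action: Callable[[T], R],
    batch_size: int = BATCH_SIZE,
    workers: int = 4,  # hypothetical degree of parallelism
) -> List[R]:
    """Apply the action to every entry, one batch per task, batches running concurrently."""

    def process_batch(batch: List[T]) -> List[R]:
        # Within a batch, entries are handled one after another.
        return [action(entry) for entry in batch]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns the batch results in submission order.
        batch_results = list(pool.map(process_batch, split_into_batches(entries, batch_size)))
    return [result for batch in batch_results for result in batch]
```

For example, 120 staging-table entries are split into three batches of 50, 50 and 20 entries. A single-entry helper BO would produce just one batch, which is why the flag adds nothing for the first MDR.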

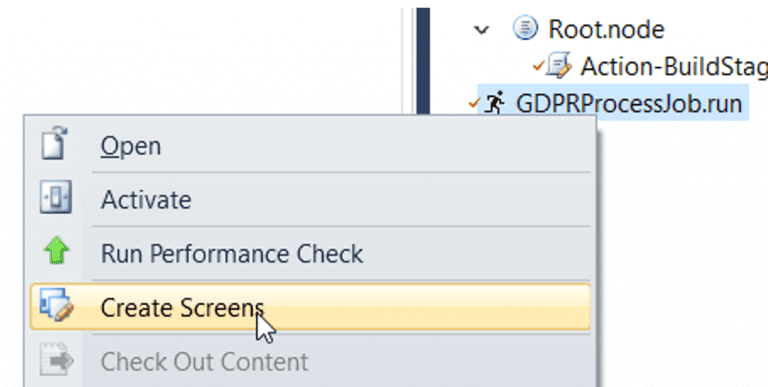

With the MDR built, we still need to create screens for it to make it available in the UI:

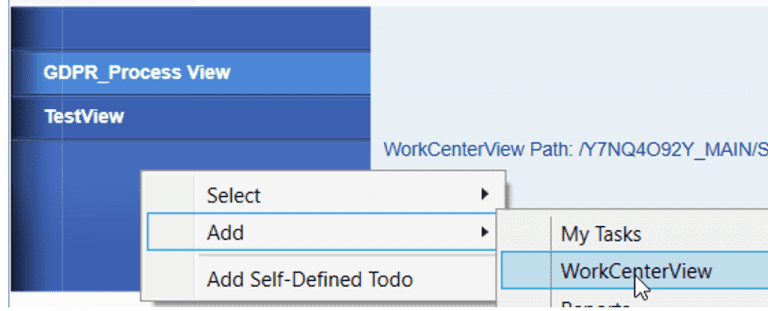

Next, the screens need to be added to a work center. It makes sense to add the MDR screen to the work center of your custom BO. To do this, perform the following steps:

- Open the <custom BO name>.WCF.uiwoc screen in UI Designer.

- In the left pane, add a new work center view:

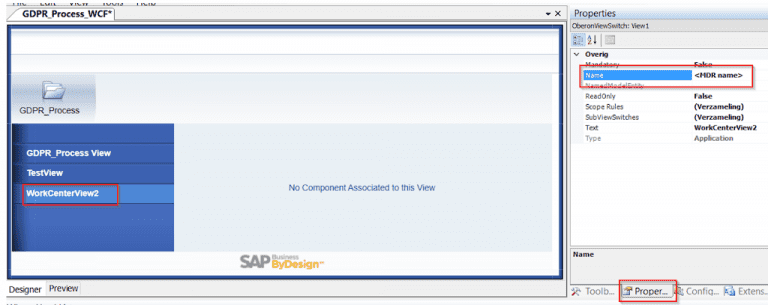

- Give the new work center view a logical name.

- In the Configuration Explorer, navigate to [Your Solution]_MAIN -> SRC -> [MDR name]_WCView.

- Drag this view into the central area of the floorplan that reads “No Component Associated to this view”.

- Activate the floorplan.

In the UI, assign the created views to the relevant business roles so that users are authorized to see the screens.

In the UI we can now create entries for our custom BO and plan jobs for our MDR!

Avoid performance issues

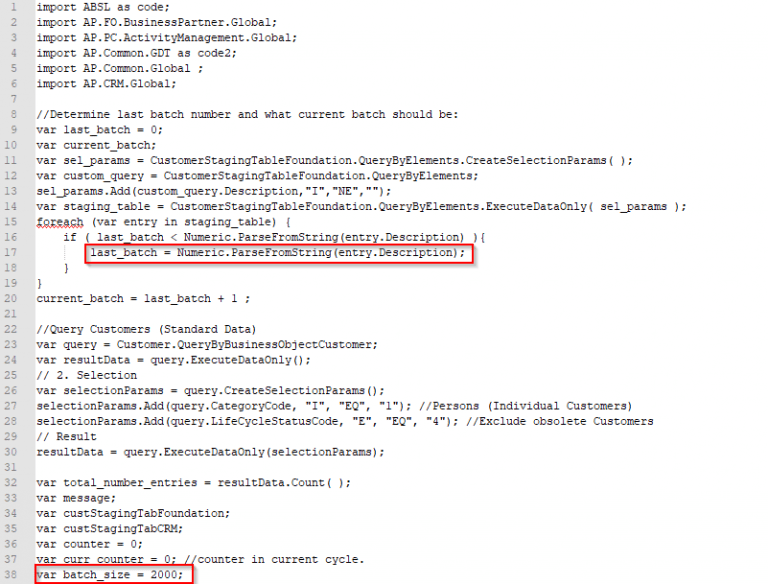

Performance can sometimes be an issue in MDRs. Only limited memory is allocated to a job, so it is wise not to process too many customers per job run while building the staging table. For this reason, the action that builds the staging table adds a fixed batch of customers (say, 2,000) to the staging table per run. It also keeps track of the last batch number processed, so that the next run picks up from there:

As seen in the code of the action above, the logic picks up after the last processed batch and keeps adding new entries to the staging table until the upper limit for the current batch is reached.
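The resumable batching described above can be sketched in Python. This is not the author's ABSL action, which is shown only in the screenshots: the class `GDPRProcess` and its `last_batch_number` and `staging_table` fields are hypothetical stand-ins for the helper BO entry and the staging table, and `batch_size=2000` uses the example figure from the text. The sketch assumes the customers are read in the same stable order on every run (for example, sorted by customer ID), because an offset only resumes correctly if that order does not change between runs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GDPRProcess:
    """Hypothetical stand-in for the single helper BO entry (GDPR_Process)."""
    id: str
    description: str
    last_batch_number: int = 0  # hypothetical field: number of batches already processed
    staging_table: List[str] = field(default_factory=list)  # stand-in for the staging BO


def build_staging_table(helper: GDPRProcess, customer_ids: List[str], batch_size: int = 2000) -> int:
    """Add the next batch of customers to the staging table; return how many were added."""
    start = helper.last_batch_number * batch_size  # first customer of the current batch
    end = start + batch_size                       # upper limit of the current batch (exclusive)
    batch = customer_ids[start:end]
    helper.staging_table.extend(batch)
    if batch:
        # Remember progress so that the next run resumes after this batch.
        helper.last_batch_number += 1
    return len(batch)
```

With 5,000 customers, successive runs add 2,000, 2,000 and 1,000 entries; any further run adds nothing, so an extra job at the end of a chain does no harm.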

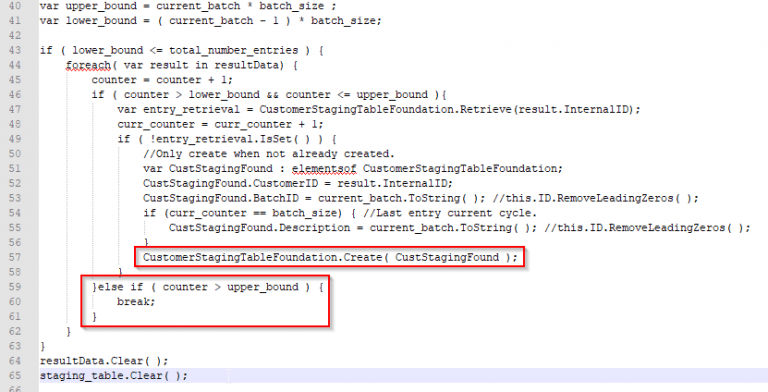

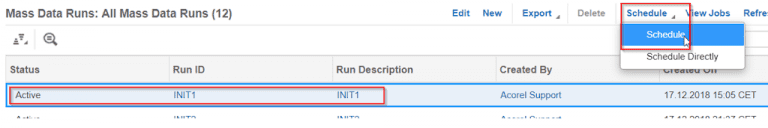

With this logic, we can plan jobs one after another (for example, one per batch); the action keeps track of where each run starts and ends. In the UI, you can plan the jobs as a chain as follows:

- Navigate to the work center for your MDR and create a new job. It copies the selection criteria and other settings from your MDR definition in the PDI.

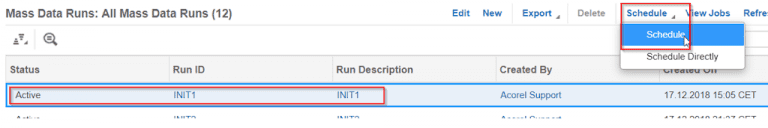

- Once the job is created, schedule the first job: specify when the single run should take place, and save.

- Now schedule a new job, but instead of the “Single Run” option, choose “Run After Job” and select the job you scheduled previously.

- Repeat these steps until you have planned enough batches to cover your full set of Customers.
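The number of chained jobs to plan is the number of customers divided by the batch size, rounded up. The Python sketch below does that arithmetic and lists which slice of customers each job in the chain would add, assuming the 2,000-customer batch size from the example above. `PlannedJob` and `plan_chain` are hypothetical helpers; the actual scheduling still happens in the UI.

```python
import math
from typing import List, NamedTuple


class PlannedJob(NamedTuple):
    number: int          # position in the chain (1-based)
    trigger: str         # "Single Run" for the first job, "Run After Job <n>" for the rest
    first_customer: int  # 0-based index of the first customer this run adds
    last_customer: int   # 0-based index of the last customer this run adds


def plan_chain(customer_count: int, batch_size: int = 2000) -> List[PlannedJob]:
    """Describe one chained job per batch until all customers are covered."""
    jobs = []
    for n in range(math.ceil(customer_count / batch_size)):
        start = n * batch_size
        end = min(start + batch_size, customer_count) - 1  # the last batch may be smaller
        trigger = "Single Run" if n == 0 else f"Run After Job {n}"
        jobs.append(PlannedJob(n + 1, trigger, start, end))
    return jobs
```

For 4,500 customers this gives three jobs: a single run for customers 0 to 1,999, followed by two “Run After Job” entries covering 2,000 to 3,999 and 4,000 to 4,499.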

Working with batches allows you to break down the complete set of data into manageable chunks. Processing a single large set of data hurts performance and can lead to exceptions in the backend; a common one is the notorious RFC Exception Failure. Processing smaller datasets should avoid this kind of exception.

Recap

We created a custom BO with an action to build the staging table. Based on this custom BO, we created an MDR object and its corresponding screens. The screens were added to the work center floorplan of our custom BO and assigned to the relevant business roles. Because of the way our action is set up, we can now plan jobs in a “chained” way and minimize performance issues. Our staging table is built!

To evaluate the customers in our staging table, a second MDR has to be created with the staging table as its custom BO. The steps are similar to the ones described above. I will not repeat them in detail, but here are some tips:

- The second MDR is based on the staging table, which contains many entries, so enable parallel processing in the MDR definition.

- In short, the action of the second MDR should select the tickets and activities (such as tasks) of a specific customer and check whether all of them were completed long enough ago (for example, more than 3 years ago) and whether no open or active tickets remain. Create library functions for these checks to make them reusable and to keep your code readable.

- Test the action from your custom BO first, for example by calling it in an on-save event; this is easier. Once it works reasonably well, plan jobs. Planned jobs execute the actions as the business user who planned them, so they can be debugged with breakpoints set for this user. During testing, it can also help to use trace messages, which appear in the debug output.

- If you need to change the Tickets and Activities of a customer using an MDR, note that the two objects reside in different deployment units! You will need a staging table for each deployment unit. Alternatively, you could use internal communication objects to change or create objects across deployment units, but debugging internal communication is almost impossible.
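The customer check from the second tip can be sketched as two small reusable functions plus a decision function, mirroring the suggestion to put these checks into library functions. This is a Python illustration under stated assumptions, not ABSL: `CrmDocument` is a hypothetical minimal view of a ticket or activity in which `completed_on` is `None` while the document is still open, and "more than 3 years" is approximated as 3 * 365 days. Note that a customer without any tickets or activities passes the check; whether that is the desired outcome is a business decision.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, Optional

# Hypothetical retention period: "more than 3 years", approximated as 3 * 365 days.
RETENTION = timedelta(days=3 * 365)


@dataclass(frozen=True)
class CrmDocument:
    """Hypothetical minimal view of a ticket or an activity."""
    completed_on: Optional[date]  # None while the document is still open


def has_open_documents(docs: Iterable[CrmDocument]) -> bool:
    """True if at least one ticket or activity is not completed yet."""
    return any(doc.completed_on is None for doc in docs)


def all_completed_before(docs: Iterable[CrmDocument], cutoff: date) -> bool:
    """True if every completed ticket or activity was completed before the cutoff date."""
    return all(doc.completed_on < cutoff for doc in docs if doc.completed_on is not None)


def is_obsolete(
    tickets: Iterable[CrmDocument],
    activities: Iterable[CrmDocument],
    today: date,
    retention: timedelta = RETENTION,
) -> bool:
    """Customer may be set to Obsolete: nothing open and nothing completed recently."""
    docs = list(tickets) + list(activities)  # materialize so both checks can iterate
    cutoff = today - retention
    return not has_open_documents(docs) and all_completed_before(docs, cutoff)
```

Keeping the open check and the age check as separate functions makes it easy to reuse them individually, for example to report why a customer was not set to Obsolete.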